| Computers! | |

| T.J. Newton |


| [UPDATED: Nov. 14, 2013]

Do you hate long division? Most people do. In fact, the only reason we have computers and the Internet and YouTube and Twitter is because humans suck at long division. We suck so bad at it we had to invent the computer to do it for us. This is a brief history of computing.

----- COMPUTERS! -----

Like everything in history, the development of the computer is a patchwork going back thousands of years. The story is full of people - and societies - who didn't know what other people and societies had done, or were doing, or even what their contemporaries were working on. The story is full of weird dead ends and ideas that almost made it. And there's a "corporate asshole" character I'm developing who was born in the 1950's and made it into upper management in the 1980's and still works there. We'll meet that person later...

So... it wasn't until the 1940's that scholars bothered to look back in time and think about how to build the modern, binary computer. Let's look back in time now...

First, there was long division. It gave us things like temples and cathedrals and skyscrapers that didn't fall down. It gave us a way to sail across oceans using the stars and a compass to navigate. It gave us trains and planes and automobiles. But long division was really friggin' hard, and even the smartest people in the world made mistakes.

Eventually, the world got so darn complicated we needed people called "computers" to sit in a room and "compute" for us. The first "computers" were people. They were responsible for things like making sure buildings didn't fall down, making sense out of census data, figuring out who owed money to the bank, and, of course, plotting a course across oceans. When these "computers" messed up, stuff went wrong. So, tools were developed to help them. There was the abacus and the slide rule, and all sorts of math "tables." (Those tables had no legs, though!) But the modern computer was cobbled together from more than just that stuff! |


| An abacus (left) and a slide rule (right) | ||

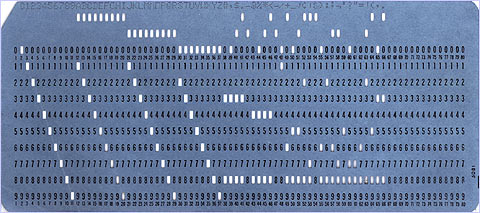

Then there was the punch card which, in the context of computing, was like an early DVD or hard drive. But it was first developed for looms (which wove intricate patterns into fabrics), and the loom people never talked to the railroad people, who started "punching tickets" to keep customers from trying to take a longer train ride than they paid for. Don't worry, some smarty pants is eventually going to put all this together, but not yet! |


| A punch card | ||

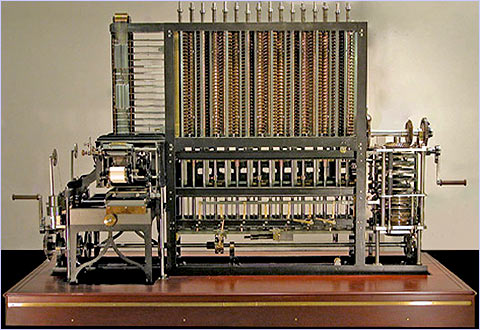

And, of course, there was Charles Babbage, who noticed the error rate among these "computers" (the people who did long division for banks and governments, in this case in Britain) and invented a machine that could actually do long division! But he was ahead of his time, and it was such a complicated endeavor, the project got dropped. It wasn't until 1991 that computer historians at London's Science Museum finally finished building one of Babbage's machines (its printer followed in the early 2000's) and showed that it would have worked if the world had just been a little more patient with him. Babbage is important because those early computer scholars looked up what Babbage did when they invented the modern computer... |


| Babbage's computer | ||

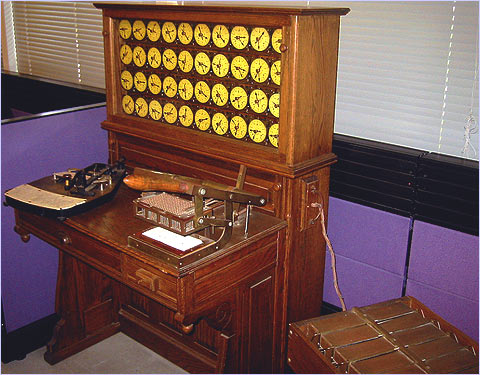

Anyway, the railroad "punch card" inspired a guy who had a contract with the U.S. government. The government wanted him to build a machine that could somehow make sense out of census data. The census, of course, is sort of a head count of all the people who live in America. And it asks about things like race, who owns a house, and how many men and women there are. By the late 1800's, it was taking almost 10 years to get all that data sorted out. And by the time it was done, it was useless, because everything had changed and it was time to take a new census. So, we got the "tabulator." It could add and subtract (and multiply in certain ways). And it used "punch cards" to read the data from the census. A punch card was just a piece of stiff paper with little holes punched in it. The census punch cards might say, "Do you own a house? [YES] [NO]" and there would be a hole punched for YES or NO. Except the questions weren't actually printed on the card. All of that is a super-boring part of the story. You need to know that the machine could tell which hole was punched, and it could add all of the home owners together for a "grand total" (like Excel), as well as sort the card into a little bin that contained only cards with homeowners (that little bin is a database - like Access). You get the idea... the tabulator! |
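If you want to see the tabulator's two tricks (adding things up and sorting cards into bins) in modern terms, here's a minimal Python sketch. The card layout, the column names, and the functions `tabulate` and `sort_into_bins` are all made up for illustration. A real tabulator did this with hole positions and wiring, not dictionaries.

```python
from collections import Counter, defaultdict

# Hypothetical census cards: each card maps a column name to the value punched.
cards = [
    {"owns_house": "YES", "sex": "F"},
    {"owns_house": "NO", "sex": "M"},
    {"owns_house": "YES", "sex": "M"},
]


def tabulate(cards, column):
    """Count how many cards have each value punched in the given column."""
    return Counter(card[column] for card in cards)


def sort_into_bins(cards, column):
    """Drop each card into a bin keyed by the value punched in the column."""
    bins = defaultdict(list)
    for card in cards:
        bins[card[column]].append(card)
    return dict(bins)


totals = tabulate(cards, "owns_house")      # the "grand total" part
bins = sort_into_bins(cards, "owns_house")  # the "little bin" part

print("Homeowners:", totals["YES"])
print("Cards in the homeowner bin:", len(bins["YES"]))
```

The counting step is the spreadsheet-style total, and the bins are the database-style grouping: the same stack of cards, read two different ways.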


| A tabulator | ||

The tabulator was a hit, and this guy started a company that went on to become IBM and sell tabulators to businesses, which businesses loved because now they could figure out how many Fords were coming off the assembly line that were either coupes or sedans or whatever. These massive companies were able to start getting their heads around what the heck they were doing! Still no long division, though. And boy did they want it! Babbage, the inventor of the computer (which could, of course, do long division), had been forgotten. There were a few people working on machines that could do long division in the early 1900's, but it was obscure work.

It took a war for humanity to finally get it together. World War II to be exact. By now, there were airplanes, and during wars, they dropped bombs. Hold on while I explain why that required long division...

If bombs had been dropped from helicopters, the helicopters could have hovered over their target and the bomb would have dropped straight to the ground. But since they used airplanes, they couldn't hover. Airplanes can't hover. So, when the bombs got dropped out of the plane, they didn't fall straight down. They fell to the ground, for sure, but they also moved in the same direction the plane was flying, sort of like when you throw something out of a moving car. So guess what they needed to figure all this out? Long division, of course!

Even the smartest people in the world - the "computers" - would occasionally screw up on long division, and a bomb would get dropped in the wrong place. But more importantly, it took forever to do all of that long division. Just like taking the census before the tabulator was invented, it was taking so long to do the math that by the time they figured out where to drop a bomb, the enemy had moved somewhere else. It didn't take a genius to figure out that the tabulator needed to be able to do long division (actually, it took a massive government bureaucracy to figure that one out)!

So, now it all gets put together. The abacus. The slide rule. The tables. The punch cards from looms and railroads. Babbage's early attempt at a modern computer. The tabulator. Some binary contraptions from the early 1900's... It was like an awakening! And, among other creations, out popped the binary punch-card computer! It was a lot like the punch-card tabulator, except it could do long division.

And there's an interesting story about electrical circuits and binary numbers and how the tabulator worked that's important if you're curious about how they did it. I've tried to put it together for you in the blue box below, but if you're not interested, you can skip the part in the blue box. The point is the first computers did tasks like Excel and Access, and IBM ended up selling this new "computer" to businesses after the war. It's worth noting that there were other computing devices that came out of the war that were less like the tabulator and were being worked on by universities and governments. But IBM probably had a lot of tabulator customers who were waiting on the computer, helping foster its success. The computer! |
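To put rough numbers on the bomb-drop problem described above, here's a simplified Python sketch. It assumes no air resistance and no wind (real calculations had to deal with both), and the function names `fall_time` and `forward_travel`, along with the sample altitude and speed, are made up for illustration. The idea is that the bomb keeps the plane's forward speed while it falls, so the forward distance is just speed times fall time.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def fall_time(altitude_m):
    """Seconds a bomb takes to fall from altitude_m, ignoring air resistance."""
    return math.sqrt(2 * altitude_m / G)


def forward_travel(altitude_m, speed_m_per_s):
    """Meters the bomb keeps moving forward while it falls (idealized)."""
    return speed_m_per_s * fall_time(altitude_m)


# Hypothetical example: a plane at 4,905 m flying at 100 m/s.
distance = forward_travel(4905, 100)
print(f"Release roughly {distance:.0f} m before the target")
```

Even this toy version needs a division and a square root for every combination of altitude and speed, which is the kind of arithmetic the human "computers" had to grind through by hand.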


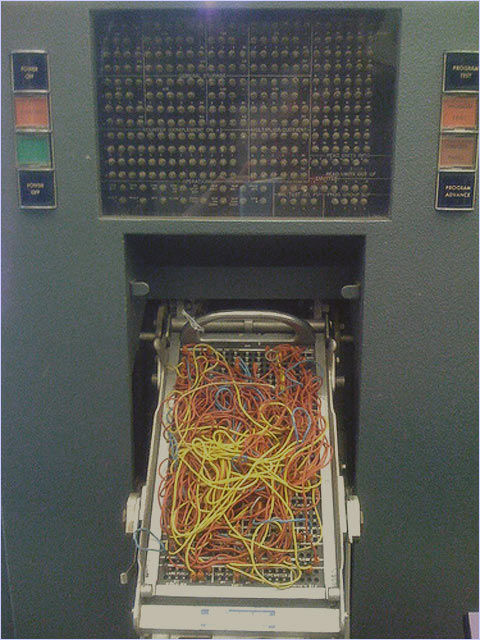

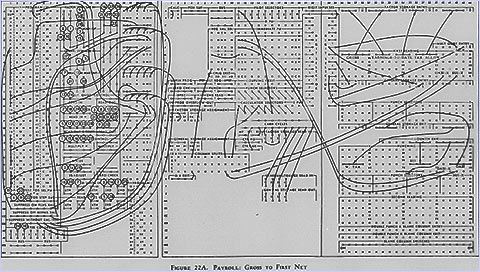

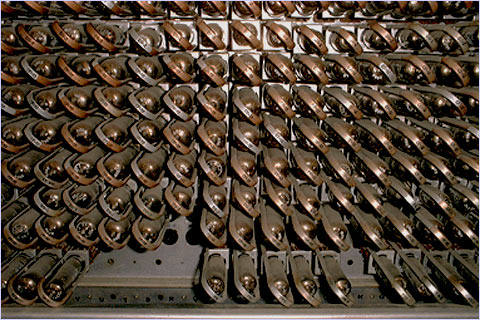

It was 1948. This new "computer" had a punch-card-making machine (with a keyboard, or at least a "push-button interface") that isn't shown in the photo below, and it's really boring and hard to understand what was going on. In addition to punch cards, "applications" used "plug boards," also called "control panels," which had to be wired by hand. You could remove and insert different boards. Each one of these "applications" could read various punch cards. It's important to keep in mind that this computer evolved from the punch-card tabulator. It had "bugs," too. Bugs literally landed on the "vacuum tubes" of an earlier prototype (vacuum tubes were part of the "circuitry"). That's the way the story goes, or so I've heard. (It looks kind of "streamlined" or "art deco" or something, doesn't it? Still slick, though!) |


| The IBM 604 - The first commercially available computer | ||


| Restored photo of a preserved IBM 604 | ||


| The front of the IBM 604 showing the display panel (top) and the plug board (bottom) | ||


| An IBM 604 plug board (also called a "control panel") | ||


| A plug board / control panel wiring diagram from the IBM 604 manual | ||


| The vacuum tubes of an IBM 604 | ||


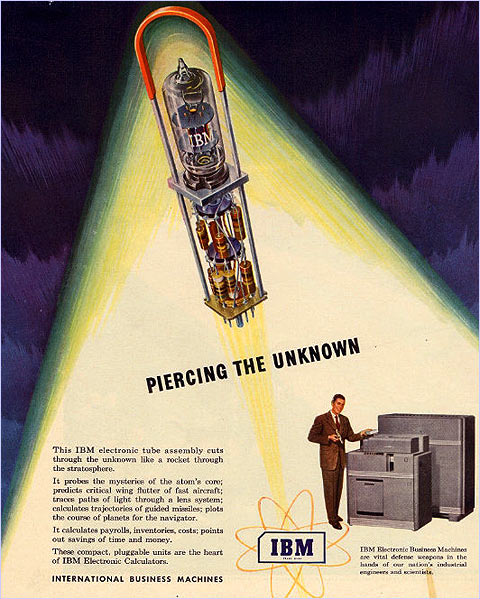

| An IBM 604 advertisement | ||


| An IBM 604 advertisement | ||


| An IBM 604 advertisement | ||

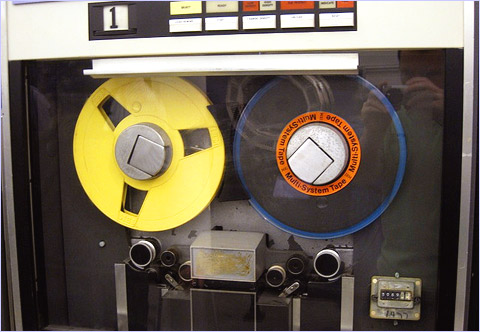

The 1950's added an early hard drive/RAM combo sort of thing called "drum memory." It appears to have worked a little like RAM in that it stored multiple punch cards for processing, but it ran like a hard drive in that it spun and was magnetic as opposed to electronic - like very slow RAM. The 50's also gave us the tape drive, which was a lot like a hard drive as we know it today. That tape drive and drum memory combination becomes important as computers develop. Keyboards and printers also appeared in the 50's, and there were early attempts at programming languages. The mouse and computer "sounds" were invented in the 1950's, but didn't really appear until later. Screens (monitors) appeared, but were probably specific to military applications such as radar, or used on experimental systems not for sale commercially. |


| An early tape drive | ||

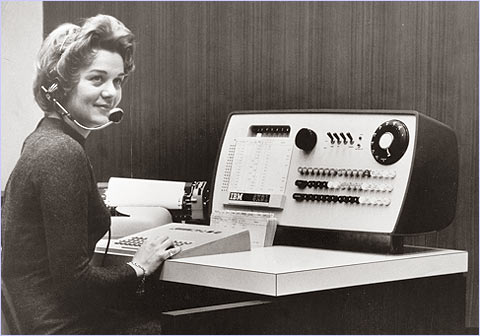

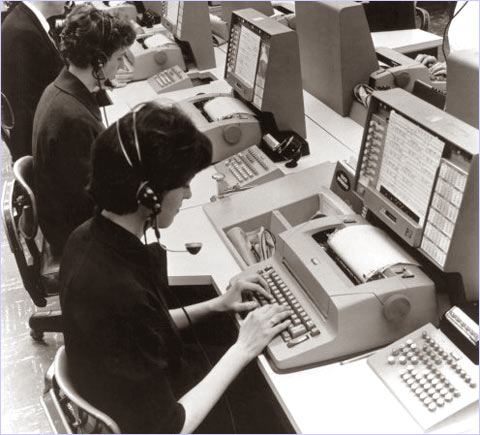

The 1960's gave us the first computer "networks," and the "Internet" was invented in 1969. A lot of the stuff from the 50's started to make more of an appearance, too (after the bugs were worked out). In fact, the very first computer network appears to have become operational in 1958. One of the concepts behind the network was the use of devices that could access the "mainframe" from remote locations. Mainframes looked a lot like the IBM 604 pictured above, except they were getting bigger instead of smaller. |


| A 1960's mainframe | ||

Let's say you needed to work on some computer data, but you were nowhere near the "mainframe" - say you were in a "field office" - what could you do? A number of 1960's computer networks initially adapted teleprinters and teletype machines to put in remote locations and access the mainframe. Photos of those adapted mainframe teleprinter devices are rare, in black and white, and specific to one commercial computer network (American Airlines' SABRE network). However, the photo below is of a Telex teleprinter from the early 1960's, and looks very similar to a prototype photo of what would become the SABRE terminal, made by IBM. The network! |


| A 1960's teleprinter | ||


| SABRE teleprinter/terminal prototype | ||


| Early SABRE teleprinter/terminal | ||

American Airlines' SABRE network was based on the military's SAGE network. Like SABRE, SAGE used adapted teleprinters and a mainframe, and was built by IBM. It became operational in 1958. SAGE was designed to connect radars, so it had a radar "screen." |


| Early SAGE operator's console | ||

MIT's DEC PDP-1, which arrived in the early 1960's, featured something approaching a monitor. It also ran one of the world's first real-time computer video games, called SpaceWar, which preceded the better known game Pong. |


| DEC PDP-1 operator's console | ||

In 1964, IBM came up with the "dumb terminal," which looked like an early PC, but couldn't do anything on its own. It was designed to be hooked to the "mainframe," and "existing telegraph and telephone cables" were used. |


| A 1960's terminal | ||

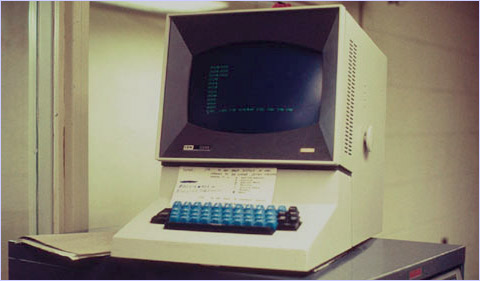

That thing (the terminal pictured above) wasn't a computer! But you can see the inspiration... I mean, it looks like a friggin' computer! I think the one above is one of the types first developed by IBM in 1964. In any case, these things had to be hooked up to one of those giant "mainframes" to do anything.

The Internet was based on the "network." Basically, the military saw all of this and thought (in their own dark sort of way), "What if someone bombed the mainframe?" So, their "network" was designed to survive a nuclear war. The Internet!

The bugs with the vacuum tubes were worked out in the 1960's, too, thanks to the transistor (as used in manufactured models). The modern database started to take shape in the 1960's as well, which was helped along by the tape drive (which stored the data) and the "dumb terminal" (which let anyone, anywhere get at the database). The first corporate and government computer databases started in the 1960's...

Back in the 60's, people didn't know how everything was going to turn out. Your company might buy that new computer with the tape drive, but they also made ones without tape drives. So, there were still punch cards everywhere. But I mean, the world as you know it today was now here, just on a much smaller and simpler scale.

By the 1970's, punch cards were being gradually phased out, and "computer science" was getting big. Databases galore, modern programming languages, networks everywhere, microchips in devices... Oh, yeah, the microchip! Those old computers were HUGE and expensive! That was one of the reasons for the network and the "dumb terminal." But as the 1980's (which we love) dawned, somehow that dumb terminal got together with a processor and gave us "micro-computing" ("micro" meaning "small"). That's what the PC (and the Apple) was called back then. The micro-computer! |


| An early micro-computer | ||

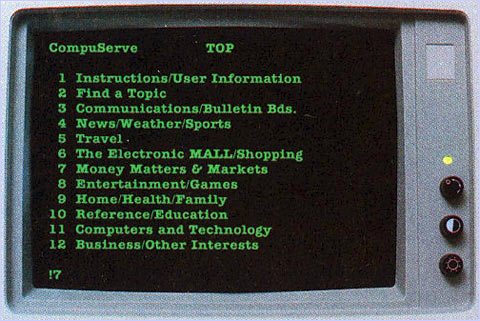

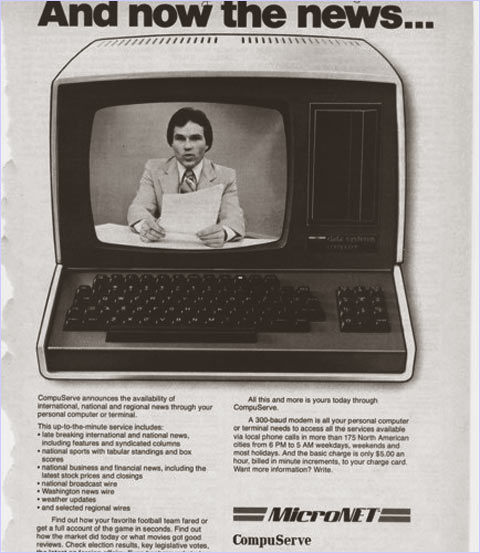

It looks familiar, doesn't it? This one you could just plug in and do stuff. No "mainframe" was required. So, here it finally is! I think those are punch card readers on the right... (They're not, they're "disk drives," which are similar to DVD devices.) Early micro-computers could be connected to existing computer networks in the workplace, and "dial-up networks" or "bulletin board networks" were popular among home users prior to the entry of the Internet into home computing. CompuServe, which began providing networking services to businesses using a dial-up time sharing system in 1969, entered the U.S. home dial-up market in 1978. By the early 1980's, CompuServe featured a dial-up encyclopedia for home use, news, and shopping. |


| A rendering of a CompuServe home screen, apparently in a print ad aimed at the home computing market | ||


| An early CompuServe advertisement aimed at the home computing market | ||

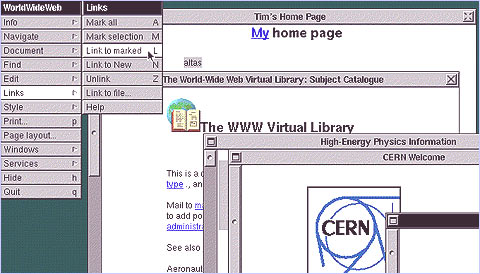

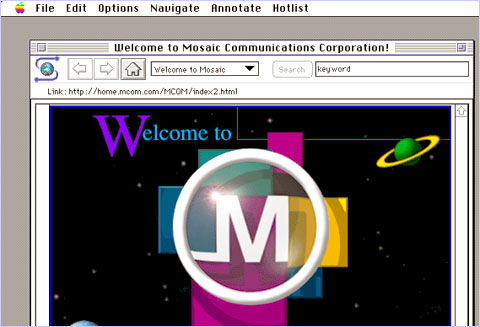

Now it's time to meet our "corporate asshole" character. He's a manager at some huge company in 1980's America who believes that he's "self made" (probably voted for Reagan), and he doesn't understand that bombing the rest of the world's factories in WWII (thanks to the computer) played a role in his success. In other words, his head's so far up his ass it isn't even funny! (It's funny!)

Anyway, he's stuck with a database dating back to the 1960's (or 1970's, but you get the idea). It's the 1980's and "micro-computers" are the new thing. Lucky for him, there's this application (an app!) - let's call it "Terminal" - that makes it possible to pull up that old database on his new micro-computer. (The old database might still be on a tape drive in another city, but hopefully he upgraded during the 1970's...) Now, applications like Excel and Access can be used to do all sorts of new things with the old data (Excel and Access went by different names back then, but same idea). It's a pain, though, and he has to pay someone, and he isn't even sure about this whole micro-computing thing. His company starts to think about things like using the billing database for direct marketing. Maybe...

A decade goes by... (damn those things go by fast!) It's the 1990's! Yay! The 90's! By the 90's, micro-computers are in every cubicle (good thing he was indecisive, or he'd be out of a job), and the military has decided to allow the public to use its nuclear-bomb-proof Internet. So, the web browser comes out in the early 1990's, and someone at his company figures out how to pull up his old 1960's database on the web. "Apps" are developed. It's bliss. It's synergy. His 1960's database can be accessed in so many new ways, and this Internet thing, too...

Please keep in mind that I'm not singling out any one person or company. This guy is just a character... Now quit looking at porn and sign those paychecks! |


| The world's first web browser was key to making the web part of the Internet. | ||


| Version 1.0 of the Mosaic web browser | ||

The corporate asshole's time on this post has come to an end... So, now the web has to work with all the stuff that's been rolled out. It's sometimes a bumpy road. But like the history of computers, it has a fascinating story all its own. Maybe someday I'll do a post on that (not anytime soon, though!). |


Related:

- http://www.cnn.com/2011/TECH/innovation/06/15/ibm.anniversary...
- http://www.computerhistory.org/revolution
- http://www.columbia.edu/cu/computinghistory
- http://en.wikipedia.org/wiki/Computer
- http://en.wikipedia.org/wiki/IBM
- http://en.wikipedia.org/wiki/Punched_card
- http://www.computerhistory.org/revolution/punched-cards/2
- http://www.computerhistory.org/revolution/calculators/1
- http://www.computerhistory.org/revolution/analog-computers/3
- http://www.computerhistory.org/revolution/birth-of-the-computer/4
- http://en.wikipedia.org/wiki/Charles_Babbage
- http://www.computerhistory.org/revolution/early-computer-companies/5
- http://www.computerhistory.org/revolution/real-time-computing/6
- http://www.columbia.edu/cu/computinghistory/tabulator.html
- http://en.wikipedia.org/wiki/Tabulating_machine
- http://www.columbia.edu/cu/computinghistory/hollerith.html
- http://www.columbia.edu/cu/computinghistory/plugboard.html
- http://www.columbia.edu/cu/computinghistory/604.html
- http://en.wikipedia.org/wiki/IBM_604
- http://en.wikipedia.org/wiki/IBM_603
- http://en.wikipedia.org/wiki/Drum_memory
- http://www.computerhistory.org/revolution/memory-storage/8
- http://mtl.math.uiuc.edu/non-credit/compconn/bits/binary.html
- http://computer.howstuffworks.com/boolean6.htm
- http://www.allaboutcircuits.com/vol_4/chpt_7/2.html
- http://www.computerhistory.org/revolution/mainframe-computers/7
- http://www.computerhistory.org/revolution/input-output/14
- http://en.wikipedia.org/wiki/Computer_music
- http://en.wikipedia.org/wiki/IBM_701
- http://en.wikipedia.org/wiki/IBM_System/360
- http://en.wikipedia.org/wiki/PDP-1
- http://plyojump.com/classes/mainframe_era.php
- http://en.wikipedia.org/wiki/IBM_2260
- http://www.columbia.edu/cu/computinghistory/2260.html
- http://en.wikipedia.org/wiki/IBM_3270
- http://www.columbia.edu/cu/computinghistory/2250.html
- http://en.wikipedia.org/wiki/Semi-Automatic_Ground_Environment
- http://en.wikipedia.org/wiki/Sabre_%28computer_system%29#History
- http://www.wired.com/wiredenterprise/2012/07/sabre/?pid=133#slideid...
- http://en.wikipedia.org/wiki/Teleprinter
- http://en.wikipedia.org/wiki/Integrated_circuit
- http://en.wikipedia.org/wiki/Internet
- http://quickbase.intuit.com/articles/timeline-of-database-history
- http://en.wikipedia.org/wiki/Database
- http://en.wikipedia.org/wiki/Computer_network
- http://en.wikipedia.org/wiki/CompuServe
- http://en.wikipedia.org/wiki/Microcomputer

Artwork (may include photos, images, audio, and/or video):

- http://www.vhinkle.com/china/inventions.html
- http://web.mit.edu/museum/exhibitions/sliderules.html
- http://en.wikipedia.org/wiki/Punched_card
- http://www.computerhistory.org/babbage
- http://www.flickr.com/photos/iannelson/143443768
- http://www.computerhistory.org/collections/accession/102645462
- http://www.piercefuller.com/library/p8041009a.html?id=p8041009a
- http://farm3.staticflickr.com/2405/2480925747_41b6b6fd11.jpg
- http://www.computerhistory.org/collections/accession/102656684
- http://www.ii.uib.no/~wagner/OtherTopicsdir/EarlyDays.htm
- http://www.science.uva.nl/museum/604.php
- http://www.computerhistory.org/revolution/early-computer-companies/5...
- http://www.columbia.edu/cu/computinghistory/ibm-pu-ad.gif
- http://ed-thelen.org/comp-hist/vs-ibm-604-ad.jpg
- http://www.flickr.com/photos/44147515@N08/4054327775
- http://plyojump.com/classes/mainframe_era.php
- http://www.baudot.net/teletype/M32.htm
- http://www.wired.com/wiredenterprise/wp-content/uploads//2012/07...
- http://plyojump.com/classes/images/computer_history/sabre_reservation...
- http://en.wikipedia.org/wiki/File:SAGE_console.jpeg
- http://www.aes.org/aeshc/docs/recording.technology.history/images5...
- http://en.wikipedia.org/wiki/IBM_2260
- http://www.n24.de/media/_fotos/bildergalerien/002011...
- http://www.computerhistory.org/revolution/the-web/20/400/2337
- http://cdn.inquisitr.com/wp-content/compuserve.jpg
- http://webdesign.tutsplus.com/articles/industry-trends/a-brief-history...

Special Thanks:

- A lot of this was worked out years ago with two high school teachers, and later, two college professors, three students, and a number of bosses and coworkers. I know they'll at least like the binary and light switches part... |

| © 2013 by T.J. Newton. All Rights Reserved. A more detailed copyright policy should be forthcoming. It will probably be similar to this one. |

|